In the realm of real-time data processing and event-driven architectures, two platforms stand out: Azure Event Hubs and Apache Kafka. Both are built to ingest and process high volumes of streaming data efficiently, but they differ in architecture, deployment model, features, and ecosystem support. Understanding these differences is crucial for organizations choosing the streaming platform that best fits their needs. Below, we first look at the strengths and weaknesses of each platform and then compare them side by side.

Exploring Azure Event Hubs

Pros of Using Azure Event Hubs

Scalability and Elasticity

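Azure Event Hubs scales to handle massive volumes of data streams. Capacity is provisioned in units (throughput units in the Standard tier, processing units in Premium, capacity units in Dedicated) that can be adjusted as workloads fluctuate, and the Standard tier's Auto-inflate feature can add throughput units automatically when traffic grows. This elasticity helps maintain consistent performance and reliability during peak traffic periods.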
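As a rough sizing aid, the sketch below estimates how many Standard-tier throughput units (TUs) a workload needs. It assumes the per-TU limits Microsoft documents for the Standard tier (ingress up to 1 MB/s or 1,000 events/s, egress up to 2 MB/s or 4,096 events/s, whichever limit is reached first); check the current Event Hubs quotas before relying on these figures. The function `required_throughput_units` is a hypothetical helper, not part of any Azure SDK.

```python
import math

# Assumed per-throughput-unit limits for the Event Hubs Standard tier.
# Verify against the current Azure documentation before using them.
TU_INGRESS_MB_PER_S = 1.0
TU_INGRESS_EVENTS_PER_S = 1000
TU_EGRESS_MB_PER_S = 2.0
TU_EGRESS_EVENTS_PER_S = 4096


def required_throughput_units(ingress_mb_s: float, ingress_events_s: float,
                              egress_mb_s: float, egress_events_s: float) -> int:
    """Return the smallest whole number of TUs that satisfies every limit."""
    needs = [
        ingress_mb_s / TU_INGRESS_MB_PER_S,
        ingress_events_s / TU_INGRESS_EVENTS_PER_S,
        egress_mb_s / TU_EGRESS_MB_PER_S,
        egress_events_s / TU_EGRESS_EVENTS_PER_S,
    ]
    # The most demanding dimension decides the TU count (never below 1).
    return max(1, math.ceil(max(needs)))


# Example: 3.5 MB/s in (2,500 events/s of ~1.4 KB each), read by two consumer groups.
print(required_throughput_units(3.5, 2500, 7.0, 5000))  # -> 4
```

Here ingress bandwidth and egress bandwidth both require 3.5 TUs, so the estimate rounds up to 4; Auto-inflate can raise the count as traffic grows, up to a maximum you configure.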

Fully Managed Service

One of the primary advantages of Azure Event Hubs is its status as a fully managed service. Microsoft Azure handles infrastructure provisioning, monitoring, and maintenance tasks, alleviating the burden of operational overhead for users. This allows organizations to focus on application development and innovation rather than infrastructure management.

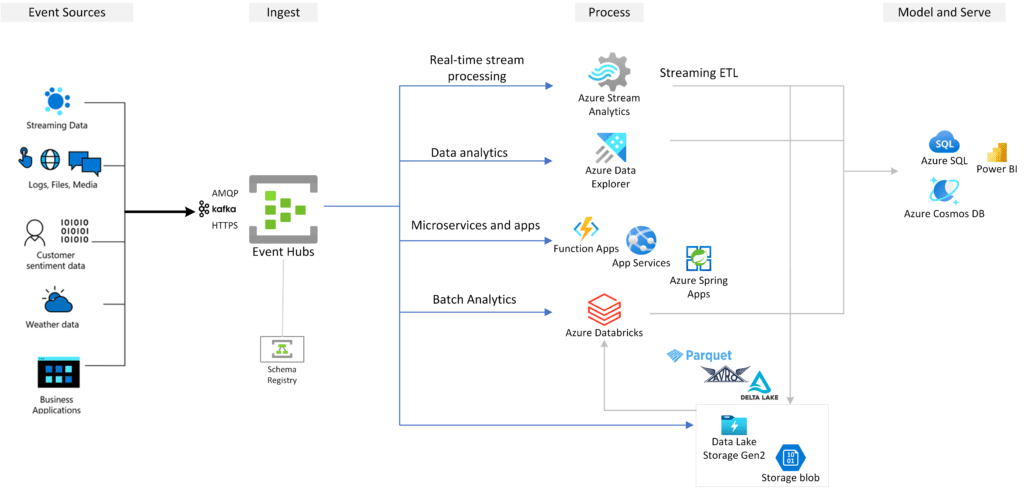

Seamless Integration with Azure Ecosystem

Azure Event Hubs seamlessly integrates with other Azure services, forming a cohesive ecosystem for building cloud-native applications. Integration with services like Azure Functions, Azure Stream Analytics, and Azure Logic Apps enables streamlined development, deployment, and orchestration of data processing workflows.

Security and Compliance

Azure Event Hubs prioritizes security and compliance, offering robust features to safeguard data integrity and confidentiality. These include built-in encryption at rest and in transit, integration with Azure Active Directory (now Microsoft Entra ID) for authentication, and Azure role-based access control (RBAC) for granular authorization. Together, these features help keep sensitive data protected and support compliance with regulatory requirements.

Cost Efficiency

Azure Event Hubs follows a pay-as-you-go pricing model: you are billed for the capacity you provision (for example, throughput units in the Standard tier, charged by the hour) and, in some tiers, for the number of ingested events. Because capacity can be adjusted to match actual usage, organizations can align their expenses with their specific data processing needs, avoiding over-provisioning and unnecessary expenditures.
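To see how capacity-based billing adds up, here is a minimal cost sketch. It assumes a bill made of an hourly charge per throughput unit plus a charge per million ingress events, which is how the Standard tier is structured at the time of writing; the function name is hypothetical and the prices in the example are placeholders, not real Azure prices, so look up current rates for your region.

```python
def estimate_monthly_cost(throughput_units: int, hours: float,
                          price_per_tu_hour: float,
                          ingress_million_events: float,
                          price_per_million_events: float) -> float:
    """Capacity charge plus ingress-event charge, rounded to cents.

    Mirrors an assumed Standard-tier billing structure; prices are inputs
    you must supply, not values baked into the code.
    """
    capacity_charge = throughput_units * hours * price_per_tu_hour
    ingress_charge = ingress_million_events * price_per_million_events
    return round(capacity_charge + ingress_charge, 2)


# Placeholder prices for illustration only (NOT real Azure prices):
# 2 TUs for a 730-hour month plus 500 million ingress events.
cost = estimate_monthly_cost(2, 730, 0.05, 500, 0.10)
print(f"{cost:.2f}")  # -> 123.00
```

Because the capacity term scales with `throughput_units * hours`, reducing TUs during quiet periods lowers that part of the bill, while the ingress term tracks actual event volume.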

Cons of Using Azure Event Hubs

Vendor Lock-In

While Azure Event Hubs offers seamless integration within the Azure ecosystem, it may lead to vendor lock-in for organizations heavily invested in Microsoft Azure services. This dependence on a single cloud provider may limit flexibility in adopting alternative solutions or migrating to other platforms in the future.

Limited Compatibility

Azure Event Hubs supports AMQP, HTTPS, and, in the Standard tier and above, an Apache Kafka-compatible endpoint, so many existing Kafka clients can connect to it through configuration changes. Even so, not every Kafka feature or ecosystem tool is supported, and it may offer less compatibility with non-Azure systems than Apache Kafka itself. This could pose challenges for organizations operating in heterogeneous environments or requiring interoperability with third-party systems.
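As an illustration of that Kafka endpoint, the sketch below builds client settings using librdkafka-style keys (the naming used by clients such as the `confluent-kafka` Python package, whose `Producer` accepts a dict like this). It assumes shared access signature authentication, where the SASL username is the literal string `$ConnectionString` and the password is the namespace connection string. The namespace name and connection string shown are hypothetical placeholders.

```python
def event_hubs_kafka_config(namespace: str, connection_string: str) -> dict:
    """Build librdkafka-style settings for an Event Hubs Kafka endpoint."""
    return {
        # Kafka clients reach Event Hubs on port 9093 of the namespace host.
        "bootstrap.servers": f"{namespace}.servicebus.windows.net:9093",
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "PLAIN",
        # With SAS authentication the username is the literal "$ConnectionString"
        # and the password is the full namespace connection string.
        "sasl.username": "$ConnectionString",
        "sasl.password": connection_string,
    }


# Hypothetical namespace and placeholder credentials.
conf = event_hubs_kafka_config(
    "contoso-ns",
    "Endpoint=sb://contoso-ns.servicebus.windows.net/;"
    "SharedAccessKeyName=send;SharedAccessKey=<key>",
)
print(conf["bootstrap.servers"])  # -> contoso-ns.servicebus.windows.net:9093
```

In this setup an individual event hub plays the role of a Kafka topic, so producer and consumer code can often stay unchanged apart from the connection settings.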

Less Flexibility in Configuration

As a fully managed service, Azure Event Hubs may offer less flexibility in configuration compared to self-hosted solutions like Apache Kafka. Organizations requiring fine-grained control over configurations and optimizations may find the managed nature of Azure Event Hubs restrictive in certain scenarios.

Latency Concerns

While Azure Event Hubs provides low latency and high throughput for most use cases, organizations with stringent latency requirements may encounter challenges. In scenarios demanding ultra-low latency, alternative solutions tailored for real-time processing may be more suitable than Azure Event Hubs.

Data Sovereignty and Compliance Considerations

Organizations operating in regions with strict data sovereignty requirements must consider data residency and compliance implications when using Azure Event Hubs. Event data is stored in the Azure region chosen when the namespace is created, so teams must confirm that a suitable region is available and that any disaster-recovery or replication setup does not move data outside permitted jurisdictions.

Exploring Apache Kafka

Pros of Using Apache Kafka

Scalability and Fault Tolerance

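Apache Kafka’s distributed architecture scales horizontally: each topic is split into partitions that are spread across multiple brokers, so adding brokers and partitions adds capacity. Each partition is also replicated to several brokers (set by the topic's replication factor), and if the broker hosting a partition's leader fails, a follower replica takes over. This combination provides fault tolerance and high availability, making Kafka well suited to handling massive volumes of data streams without compromising performance.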
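The sketch below shows why replication across brokers keeps partitions available when a broker fails. It uses a simplified round-robin placement (Kafka's real assignment also randomizes the starting broker and can be rack-aware), and the helper names `assign_replicas` and `live_replicas` are hypothetical.

```python
def assign_replicas(num_partitions: int, brokers: list[int],
                    replication_factor: int) -> dict[int, list[int]]:
    """Simplified round-robin placement: partition p starts on broker index p mod n."""
    if replication_factor > len(brokers):
        raise ValueError("replication factor cannot exceed the broker count")
    n = len(brokers)
    return {
        # The first broker in each list is the preferred leader; the rest are followers.
        p: [brokers[(p + i) % n] for i in range(replication_factor)]
        for p in range(num_partitions)
    }


def live_replicas(assignment: dict[int, list[int]],
                  failed: set[int]) -> dict[int, list[int]]:
    """Replicas of each partition that remain on brokers that have not failed."""
    return {p: [b for b in reps if b not in failed] for p, reps in assignment.items()}


layout = assign_replicas(6, brokers=[1, 2, 3], replication_factor=2)
print(layout)  # -> {0: [1, 2], 1: [2, 3], 2: [3, 1], 3: [1, 2], 4: [2, 3], 5: [3, 1]}
# If broker 2 fails, every partition still has a copy on a live broker.
print(all(live_replicas(layout, failed={2}).values()))  # -> True
```

Preferred leaders rotate across brokers 1, 2, and 3, so leadership (and therefore client traffic) is spread evenly, while the second copy of each partition covers a single broker failure.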

High Throughput and Low Latency

Kafka excels at achieving high throughput and low latency, making it ideal for use cases requiring real-time data processing and analytics. Its append-only log is written and read sequentially, and features like producer batching and compression (gzip, Snappy, LZ4, or Zstandard) reduce the number and size of network requests, contributing to its strong performance.
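To illustrate why batching and compression help, the sketch below groups small records into batches capped at a byte limit (similar in spirit to the producer's `batch.size` setting) and compresses each batch. It is a simplification: `make_batches` is a hypothetical helper, the output is not Kafka's record-batch format, and `zlib` from the Python standard library stands in for Kafka's compression codecs.

```python
import zlib


def make_batches(records: list[bytes], max_batch_bytes: int) -> list[list[bytes]]:
    """Greedily group records into batches of at most max_batch_bytes.

    A single record larger than the limit still gets a batch of its own.
    """
    batches, current, size = [], [], 0
    for rec in records:
        if current and size + len(rec) > max_batch_bytes:
            batches.append(current)
            current, size = [], 0
        current.append(rec)
        size += len(rec)
    if current:
        batches.append(current)
    return batches


# 1,000 small, repetitive JSON events (31 bytes each).
records = [f'{{"sensor": "s-{i % 4}", "temp": 21.5}}'.encode() for i in range(1000)]
batches = make_batches(records, 16_384)
raw = sum(len(r) for r in records)
compressed = sum(len(zlib.compress(b"".join(batch))) for batch in batches)
print(len(batches), raw, compressed)
```

Instead of 1,000 separate sends, the data travels in two requests, and because streaming payloads are often repetitive, compression shrinks them substantially.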

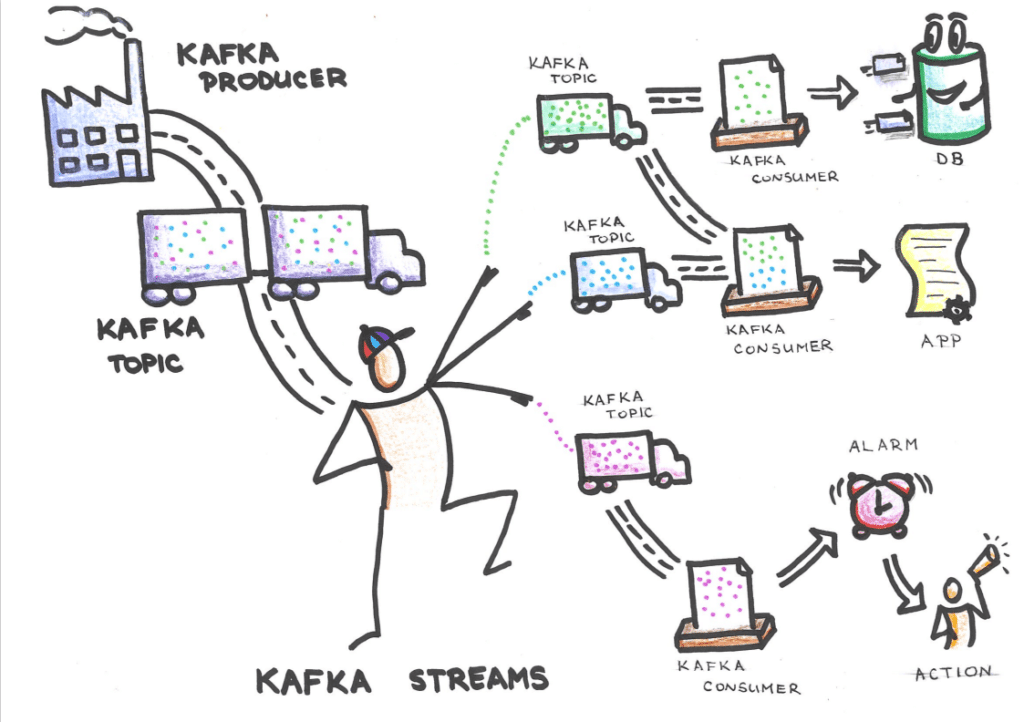

Versatile Ecosystem

Kafka boasts a vibrant ecosystem with support for various programming languages, connectors, and frameworks. Integration with popular tools like Apache Spark, Apache Flink, and Apache Storm extends its capabilities, enabling seamless data processing, analytics, and integration with existing systems.

Flexibility and Customization

Apache Kafka offers extensive flexibility and customization options, allowing users to tailor configurations to their specific requirements. From partitioning strategies (including custom partitioners) to replication factors, retention settings, and log compaction, Kafka provides fine-grained control over data management, enabling organizations to optimize performance and resource utilization.
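Kafka's Java producer lets you plug in a custom partitioner through the `partitioner.class` setting. The Python sketch below mimics the idea with two interchangeable strategies: a key-hash partitioner (CRC32 stands in for the murmur2 hash Kafka's default partitioner actually uses) and a hypothetical custom strategy that reserves partition 0 for priority keys. All function names here are illustrative.

```python
import zlib
from typing import Callable

# A partitioner maps (key, partition count) to a partition number.
Partitioner = Callable[[bytes, int], int]


def hash_partitioner(key: bytes, num_partitions: int) -> int:
    """Same key -> same partition. CRC32 stands in for Kafka's murmur2 hash."""
    return zlib.crc32(key) % num_partitions


def make_reserved_partitioner(priority_keys: set[bytes]) -> Partitioner:
    """Hypothetical custom strategy: partition 0 is reserved for priority keys."""
    def partition(key: bytes, num_partitions: int) -> int:
        if num_partitions < 2:
            raise ValueError("need at least two partitions to reserve one")
        if key in priority_keys:
            return 0
        # Every other key is hashed across the remaining partitions 1..n-1.
        return 1 + zlib.crc32(key) % (num_partitions - 1)
    return partition


partitioner = make_reserved_partitioner({b"customer-vip"})
for key in (b"customer-vip", b"customer-17", b"customer-17"):
    print(key, partitioner(key, 6))
```

Both strategies keep a given key on a single partition, which preserves per-key ordering; the custom one additionally isolates priority traffic so that its consumers are not slowed by everyone else's backlog.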

Community Support and Innovation

Backed by a robust community of developers and contributors, Apache Kafka undergoes continuous innovation and improvement. Regular updates and enhancements ensure that Kafka remains at the forefront of streaming technology, addressing emerging challenges and evolving user needs effectively.


Cons of Using Apache Kafka

Complexity of Deployment and Management

Deploying and managing Apache Kafka clusters can be complex and resource-intensive, particularly for organizations lacking expertise in distributed systems and infrastructure management. Configuring and fine-tuning Kafka clusters requires careful consideration of factors like hardware specifications, network configurations, and storage requirements.

Operational Overhead

While Kafka offers unparalleled flexibility, this flexibility comes at the cost of increased operational overhead. Organizations must invest time and resources in monitoring, maintenance, and troubleshooting tasks to ensure the smooth operation of Kafka clusters, especially in large-scale production environments.
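One routine monitoring task is tracking consumer lag: for each partition, the gap between the latest offset in the log and the offset a consumer group has committed. The sketch below computes it from sample offsets. In practice the numbers would come from a tool such as `kafka-consumer-groups.sh --describe` or an admin client; the offsets and the alert threshold here are made up for illustration.

```python
def consumer_lag(end_offsets: dict[int, int],
                 committed: dict[int, int]) -> dict[int, int]:
    """Per-partition lag: log-end offset minus the group's committed offset.

    Partitions with no committed offset are counted from offset 0, a
    simplifying assumption (a real tool would consider the log-start offset).
    """
    return {p: end - committed.get(p, 0) for p, end in end_offsets.items()}


# Sample offsets for one topic with three partitions (made-up values).
lag = consumer_lag({0: 1500, 1: 1200, 2: 980}, {0: 1500, 1: 1100, 2: 400})
print(lag, sum(lag.values()))  # -> {0: 0, 1: 100, 2: 580} 680

LAG_ALERT_THRESHOLD = 500  # hypothetical alerting threshold
print([p for p, value in lag.items() if value > LAG_ALERT_THRESHOLD])  # -> [2]
```

A steadily growing lag on one partition usually points to a slow or stuck consumer, or to a hot key concentrating traffic on that partition.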

Storage and Retention Costs

Storing and retaining data in Kafka can incur significant costs, especially for organizations dealing with large volumes of data streams over extended periods, because the disk space required grows with the ingest rate, the retention period, and the replication factor. Balancing the trade-off between data retention policies and storage costs requires careful consideration of factors like compliance requirements, data access patterns, and budget constraints.
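A back-of-the-envelope estimate makes that trade-off concrete: retained bytes equal ingest rate times retention period times replication factor. The helper below is a hypothetical sketch in decimal units; it ignores compression, index files, and free-space headroom, all of which shift the real figure.

```python
def retained_storage_tb(ingress_mb_per_s: float, retention_days: float,
                        replication_factor: int) -> float:
    """Disk needed to hold `retention_days` of data, counting every replica."""
    retention_seconds = retention_days * 24 * 60 * 60
    total_mb = ingress_mb_per_s * retention_seconds * replication_factor
    return total_mb / 1_000_000  # decimal units: 1 TB = 1,000,000 MB


print(retained_storage_tb(5, 7, 3))   # -> 9.072  (7 days at 5 MB/s, 3 replicas)
print(retained_storage_tb(5, 30, 3))  # -> 38.88  (same stream kept for 30 days)
```

Stretching retention from one week to 30 days multiplies storage by roughly 4.3, which is why retention policy is often the biggest lever on Kafka storage cost.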

Data Loss Risk

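In certain scenarios, Apache Kafka may lose data during hardware failures or network partitions, particularly when topics and producers are not configured for durability (for example, a replication factor of 1, producers using `acks=0` or `acks=1`, or unclean leader election enabled). While Kafka offers mechanisms like replication, `acks=all`, and the `min.insync.replicas` setting to mitigate this risk, organizations must still implement robust disaster recovery strategies to safeguard against potential data loss incidents.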
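The interaction between these settings can be reasoned about with a simplified model, sketched below. It assumes unclean leader election is disabled and that all replicas are in sync when failures occur; real behavior depends on in-sync replica membership at the moment of each write. The function name `failure_tolerance` is hypothetical.

```python
def failure_tolerance(replication_factor: int, min_insync_replicas: int,
                      acks: str) -> tuple[int, int]:
    """Return (broker losses survivable without losing acknowledged writes,
    broker losses survivable while producers can still write).
    """
    if not 1 <= min_insync_replicas <= replication_factor:
        raise ValueError("need 1 <= min.insync.replicas <= replication factor")
    if acks == "all":
        # An acknowledged write exists on at least min.insync.replicas brokers,
        # and writes are rejected once fewer than that many replicas are in sync.
        return min_insync_replicas - 1, replication_factor - min_insync_replicas
    if acks in ("0", "1"):
        # The write may live only on the leader (acks=1) or nowhere yet (acks=0),
        # but min.insync.replicas is not enforced, so any surviving replica can lead.
        return 0, replication_factor - 1
    raise ValueError("acks must be '0', '1', or 'all'")


print(failure_tolerance(3, 2, "all"))  # -> (1, 1)
print(failure_tolerance(3, 2, "1"))    # -> (0, 2)
print(failure_tolerance(1, 1, "all"))  # -> (0, 0)
```

The first line reflects the commonly recommended combination of replication factor 3, `min.insync.replicas=2`, and `acks=all`: one broker can fail without losing acknowledged data or blocking writes.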

Learning Curve

Mastering Apache Kafka involves a steep learning curve, particularly for users unfamiliar with distributed systems and stream processing concepts. Organizations must invest in training and skill development initiatives to empower their teams with the knowledge and expertise needed to leverage Kafka effectively.

Key differences between Azure Event Hubs and Apache Kafka

| Aspect | Azure Event Hubs | Apache Kafka |
|---|---|---|
| Architecture | Event Hubs is a managed event streaming platform provided by Microsoft Azure. It is built on a partitioned consumer model, where incoming data is distributed across multiple partitions within an event hub. This architecture enables scalability and high throughput, with Azure managing the underlying infrastructure. | Kafka is an open-source distributed streaming platform known for its durable, fault-tolerant, and high-performance architecture. Kafka uses a partitioned log structure, where data is stored in topics whose partitions are spread across multiple servers (brokers). It offers high availability and horizontal scalability, allowing it to handle massive data streams. |
| Deployment | As a managed service on Azure, Event Hubs is straightforward to deploy. Users can provision Event Hubs namespaces through the Azure portal or programmatically via APIs. Azure takes care of infrastructure provisioning, scaling, and maintenance, allowing developers to focus on application logic. | Kafka is highly flexible in terms of deployment options. It can be deployed on-premises, on cloud platforms like Azure, AWS, or Google Cloud, or consumed as a fully managed service through platforms like Confluent Cloud. However, setting up and managing your own Kafka clusters requires more operational effort than a managed service like Event Hubs. |
| Integration | Being part of the Azure ecosystem, Event Hubs integrates with other Azure services such as Azure Functions, Azure Stream Analytics, and Azure Data Lake Storage. It also supports AMQP and HTTPS natively, plus an Apache Kafka-compatible endpoint in the Standard tier and above, making it compatible with a wide range of client libraries and frameworks. | Kafka has a rich ecosystem with a vast array of connectors, libraries, and third-party tools. It supports various programming languages and frameworks, making it highly extensible and adaptable to diverse use cases. Kafka Connect allows easy integration with external systems, while Kafka Streams enables building complex stream processing applications. |
| Security | Event Hubs provides robust security features, including Microsoft Entra ID (formerly Azure Active Directory) integration for authentication and role-based access control (RBAC) for fine-grained authorization. Data at rest and in transit is encrypted using industry-standard protocols. | Kafka offers configurable security mechanisms such as SSL/TLS encryption, SASL authentication, and access control lists (ACLs) for authorization. While Kafka provides granular control over security settings, ensuring proper configuration and management is the responsibility of its users or administrators. |
| Performance | Event Hubs scales by adjusting provisioned capacity, and the Standard tier's Auto-inflate feature can add throughput units automatically as the workload grows. It can handle millions of events per second with low latency, making it well suited to applications requiring elastic scalability and high throughput. | Kafka’s architecture is designed for horizontal scalability and high performance. By adding more brokers and partitions, Kafka clusters can scale close to linearly to handle increasing data volumes. Kafka’s high throughput and low latency make it suitable for demanding use cases like real-time analytics and data processing pipelines. |
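On both platforms, the partition count also bounds how far reading can scale within a single consumer group, because each partition is read by one consumer in the group at a time. The sketch below is modeled on Kafka's `RangeAssignor` (contiguous ranges of sorted partitions handed to sorted consumers) and shows what happens when consumers outnumber partitions. The function name `range_assign` and the consumer IDs are hypothetical.

```python
def range_assign(partitions: list[int], consumers: list[str]) -> dict[str, list[int]]:
    """Split one topic's partitions into contiguous ranges, one per consumer."""
    parts = sorted(partitions)
    members = sorted(consumers)
    per_consumer, extra = divmod(len(parts), len(members))
    assignment, start = {}, 0
    for i, member in enumerate(members):
        # The first `extra` consumers each receive one additional partition.
        count = per_consumer + (1 if i < extra else 0)
        assignment[member] = parts[start:start + count]
        start += count
    return assignment


print(range_assign(list(range(8)), ["c1", "c2", "c3"]))
# -> {'c1': [0, 1, 2], 'c2': [3, 4, 5], 'c3': [6, 7]}

# Ten consumers but only eight partitions: two consumers sit idle.
wide = range_assign(list(range(8)), [f"c{i}" for i in range(10)])
print([c for c, parts in wide.items() if not parts])  # -> ['c8', 'c9']
```

This is why partition counts are usually chosen with the expected peak number of parallel consumers in mind; adding consumers beyond that point adds no read throughput.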

Conclusion

In conclusion, both Azure Event Hubs and Apache Kafka offer robust solutions for building scalable, real-time data streaming applications. The choice between the two depends on factors such as deployment preferences, ecosystem requirements, security considerations, and specific use case demands. Organizations must evaluate these factors carefully to determine which platform best aligns with their needs and objectives in the realm of event-driven architectures and real-time data processing.